|

||||||||||||||||||||||

![Australia's First Online Journal Covering Air Power Issues [ISSN 1832-2433] Australia's First Online Journal Covering Air Power Issues [ISSN 1832-2433]](APA/APA-Title-Analyses.png) |

||||||||||||||||||||||

![Sukhoi PAK-FA and Flanker Index Page [Click for more ...]](APA/flanker.png) |

![F-35 Joint Strike Fighter Index Page [Click for more ...]](APA/jsf.png) |

![Weapons Technology Index Page [Click for more ...]](APA/weps.png) |

![News and Media Related Material Index Page [Click for more ...]](APA/media.png) |

|||||||||||||||||||

![Surface to Air Missile Systems / Integrated Air Defence Systems Index Page [Click for more ...]](APA/sams-iads.png) |

![Ballistic Missiles and Missile Defence Page [Click for more ...]](APA/msls-bmd.png) |

![Air Power and National Military Strategy Index Page [Click for more ...]](APA/strategy.png) |

![Military Aviation Historical Topics Index Page [Click for more ...]](APA/history.png)

|

![Intelligence, Surveillance and Reconnaissance and Network Centric Warfare Index Page [Click for more ...]](APA/isr-ncw.png) |

![Information Warfare / Operations and Electronic Warfare Index Page [Click for more ...]](APA/iw.png) |

![Systems and Basic Technology Index Page [Click for more ...]](APA/technology.png) |

![Related Links Index Page [Click for more ...]](APA/links.png) |

|||||||||||||||

| Last Updated: Mon Jan 27 11:18:09 UTC 2014 | ||||||||||||||||||||||

|

||||||||||||||||||||||


| Assessing the Impact of Exponential Growth Laws on Future Combat Aircraft Design |

|||||||||||||||||||||||||||||||||||||||

|

|||||||||||||||||||||||||||||||||||||||

|

|||||||||||||||||||||||||||||||||||||||

|

F-22A Raptor, F-86H Sabre, P-38J Lightning and F-15E Strike Eagle. These four fighters are characteristic of upper tier air superiority fighters built to kinematically dominate opponents in close combat (U.S. Air Force image).

|

|||||||||||||||||||||||||||||||||||||||

|

|||||||||||||||||||||||||||||||||||||||

|

|

|||||||||||||||||||||||||||||||||||||||

|

|||||||||||||||||||||||||||||||||||||||

Introduction

|

|||||||||||||||||||||||||||||||||||||||

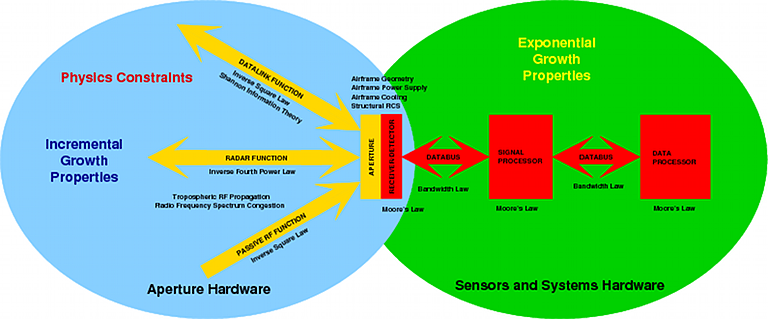

Evolutionary Considerations in Combat Aircraft

The “technological evolution” of combat aircraft over the last century shows that technological achievement has always reflected a confluence of available basic technology and operational imperatives in intended capability. While the former has enabled specific capabilities, the latter has acted to effectively attrit less competitive technologies over time [1]. Perhaps the best case study of this effect has been the transition from reciprocating piston engine and propeller propulsion to the use of gas turbines. While reciprocating engines and propellers remain in use over a century since their introduction, they have been completely displaced in all mainstream designs. Hybrids, such as gas turbine powered propeller and propfan systems, are employed in applications where low fuel burn at low and medium operating altitudes is the pivotal imperative. Turbofan engines have become the mainstay in mainstream applications, with specific design optimisations in bypass ratio and other key design parameters characterising specific design niches.

In the language of evolutionary theory, the introduction of new designs by variation in existing basic technology or the application of new basic technology amounts to a mechanism not unlike “mutation” in biological evolution, but with the important caveat that an entity separate from the evolving entity provides reproduction through manufacture. Another important difference from biological systems is that operational aircraft can be “mutated” through modification throughout their operational life, such modifications encompassing changes to hardware and, more recently, software. In biological evolution, “fitness” is some measure which describes the potential of a species to reproduce and thus propagate through time. In combat aircraft, reproduction amounts to continued manufacture or continued operation with ongoing modification to adapt the design to a changing environment. The analogy to biological extinction is withdrawal from service, as a result of attrition in combat or other causes which make continued production or operation of the design non-viable.

Contemporary military aircraft are complicated systems, combining an airframe, propulsion system, control system, sensors, navigation and communication systems, weapon systems, and a range of supporting systems ranging from cooling to crew life support systems. The choice of specific technologies reflects a number of imperatives, some of which could be described as “short term” or “near term” imperatives, and some of which are “long term” imperatives. Short term imperatives may include the availability or popularity of certain components, materials or design strategies, while long term imperatives usually include qualities in combat and life cycle costs. In most combat aircraft the dominant short and long term imperative continues to be “combat effectiveness”, most often defined as some combined result of “lethality” and “survivability”.

Broadly, the term “combat effectiveness” has been and continues to be used in many contexts, and thus cannot be said to be exactly defined, as definitions vary widely with context and application. Moreover, many attempts to quantify combat effectiveness are subject to the cognitive bias of the observer or assessor attempting to do so [2]. The accepted practice in the definition of combat effectiveness is the conflation of combat effects and survivability, as seen in the common use of Loss-Exchange-Rates as a Measure of Effectiveness in air to air combat. This practice is not unique, considering the attempt to quantify combat effectiveness in the model introduced by Wang and Li, in which combat effectiveness E is defined as a linear combination of terms describing effectiveness in air to air and air to ground combat, with each term conflating measures of combat effect and survivability [3].
Conflating lethality and survivability is useful in many situations, but incurs some risks as it can often obscure specific limitations in a design which may have a dominant effect.

Figure 1. Mitsubishi A6M2 Zeke Model 22. A pivotal weakness of most Japanese fighters during the 1940s was the absence of design measures to reduce vulnerability such as armour plating and fuel system protection. When the nature of combat operations shifted from the offensive strategic manoeuvre to sustained attrition warfare in the Solomons campaign, catastrophic attrition of aircraft and pilots ensued. The Japanese design philosophy of neglecting vulnerability to maximise lethality and minimise susceptibility proved unsuccessful for the type of conflict these aircraft were employed in (U.S. Air Force image).

Figure 2. F-22A Raptor (2010) and P-47D Thunderbolt (1944). Both incorporate the best survivability measures available during their respective periods of development (U.S. Air Force image).

An excellent historical case study of the decoupling of combat effect and survivability was the practice by Japanese combat aircraft designers, during the 1930s and 1940s, of sacrificing survivability measures such as armour and fire suppression to minimise weight and thus maximise lethality through best possible aerodynamic performance. This practice yielded good effectiveness in the early phase of the Second World War, where Japanese forces fought a high tempo offensive strategic manoeuvre campaign exploiting superior numbers, surprise and strategic mobility. Once the character of the conflict shifted to sustained attrition warfare, the survivability limitations of these designs resulted in catastrophic attrition losses in aircraft and personnel. Refer Figure 1.

Figure 3. Designed to survive defences by high speed flight at low altitudes and toss delivery of nuclear bombs, the F-105 suffered heavy losses when employed in dive bomb deliveries, using dumb bombs, during the South East Asian conflict (U.S. Air Force image).

Another interesting case study of mismatched design optimisation and combat environment is the operational use of the Republic F-105 Thunderchief in the South East Asian conflict during the 1960s and early 1970s. Initially developed to deliver tactical nuclear weapons by toss bombing, through dense air defences, the design was optimised to evade hostile fighters and Surface to Air Missiles by exploiting very high speed and low altitude flight. In South East Asia the aircraft was employed to deliver unguided conventional bombs, and suffered heavy losses primarily due to damage inflicted by low calibre low altitude Anti-Aircraft Artillery (AAA) fire, mostly during low altitude dive weapon delivery. Refer Figure 3 [4].

In the absence of a widely accepted definition of “combat effectiveness” the following definition will be applied:

Combat Effectiveness = (the ability of the aircraft to produce the intended combat effect in a specific combat environment) (1)

Combat effectiveness reflects in some fashion the effects of “survivability” and “lethality”. Survivability research is mature and survivability as a quantitative measure is well defined [5]. The definitions by Ball and Atkinson are most widely employed and provide a robust basis for both qualitative and quantitative analysis, cite [6]:

“Aircraft combat survivability (ACS) is defined as the capability of an aircraft to avoid or withstand a man-made hostile environment. It can be measured by the probability the aircraft survives an encounter (combat) with the environment, $P_S$.”

Combat survivability in turn reflects three important measures, these being “susceptibility”, “vulnerability” and “killability”, cite:

“Susceptibility is the inability of an aircraft to avoid (the guns, approaching missiles, exploding warheads, air interceptors, radars, and all of the other elements of an enemy's air defense that make up) the man-made hostile mission environment. The more likely an aircraft on a mission is hit by one or more damage-causing mechanisms generated by the warhead on a threat weapon (e.g. warhead fragments, blast, and incendiary particles), the more susceptible is the aircraft. Susceptibility can be measured by the probability the aircraft is hit by one or more damage mechanisms, $P_H$. Thus, Susceptibility = $P_H$

Vulnerability is the inability of an aircraft to withstand (the hits by the damage-causing mechanisms created by) the man-made hostile environment. The more likely an aircraft is killed by the hits by the damage mechanisms from the warhead on a threat weapon, the more vulnerable is the aircraft. Vulnerability can be measured by the conditional probability the aircraft is killed given that it is hit, $P_{K|H}$. Thus, Vulnerability = $P_{K|H}$

Killability is the inability of the aircraft to both avoid and withstand the man-made hostile environment. Thus, killability is the ease with which the aircraft is killed by the enemy air defense. Killability can be measured by the probability the aircraft is killed, $P_K$. Killability is given by the joint probability the aircraft is hit (its susceptibility) and it is killed given the hit (its vulnerability). Thus, $P_K = P_H P_{K|H}$

If the threat weapon contains a high explosive (HE) warhead with proximity fuzing, the subscript H for a hit is replaced with an F for warhead fuzing.”

The relationships between these measures are defined thus [5]:

$$P_S = 1 - P_K = 1 - P_H P_{K|H}$$

Survivability = 1 - Killability = 1 - Susceptibility × Vulnerability

Measures to assess or quantify lethality in combat are much less well defined. While the measure of Probability of Kill, usually represented as $P_K$ or $P_{KILL}$, is frequently employed in the context of weapons deployed against specific targets, the measure itself is a conflation of probabilities reflecting the design of various weapon components as well as the design of the delivery aircraft and its operational application.

Other measures of lethality have been employed. The measure of “throw weight”, defined as the product of some normalised weapon payload and the range of the delivery system, has been widely employed for strategic weapon systems, including bomber aircraft. It is a good measure of combat utility for a bomber, and for a fleet of bombers, permitting cardinal comparisons between fleets. Its limitation is that it does not encompass a measure of effectiveness in killing targets, as the latter is effectively nulled by the normalisation of the weapon payload. Loss-Exchange-Rates (LER) are a relative measure of fighter aircraft lethality, but as they conflate survivability effects, they are not a true lethality measure.

In assessing lethality, several important considerations apply:

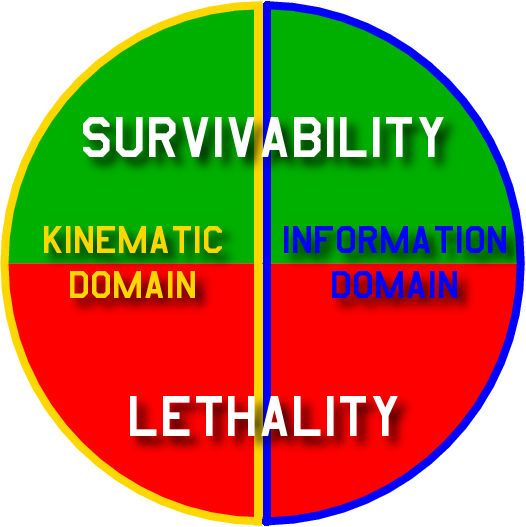

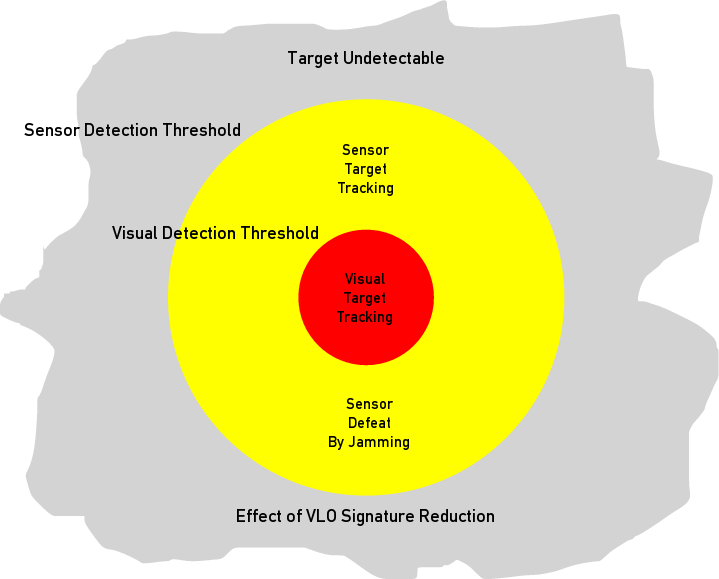

These considerations expose an important duality in combat aircraft airframe designs and sensor fits: key functional capabilities and sensor suite components most often make important contributions to both lethality and survivability. Refer Figure 4.

Figure 4.

If we place airframe performance into a domain encompassing “kinematic domain capabilities” and sensor performance into a domain encompassing “information domain capabilities”, then both lethality and survivability are complex functions of the aircraft's kinematic and information domain capabilities [7]. |
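The Ball and Atkinson relationships quoted above (killability as the product of susceptibility and vulnerability, and survivability as its complement) can be illustrated with a minimal sketch. The function names and the numeric inputs below are hypothetical, chosen only to show the arithmetic; they are not drawn from the cited sources.

```python
def killability(p_hit: float, p_kill_given_hit: float) -> float:
    """P_K = P_H * P_K|H, per the definitions quoted above."""
    for p in (p_hit, p_kill_given_hit):
        if not 0.0 <= p <= 1.0:
            raise ValueError("probabilities must lie in [0, 1]")
    return p_hit * p_kill_given_hit


def survivability(p_hit: float, p_kill_given_hit: float) -> float:
    """P_S = 1 - P_K = 1 - P_H * P_K|H."""
    return 1.0 - killability(p_hit, p_kill_given_hit)


# Hypothetical inputs: two aircraft with the same susceptibility (P_H)
# but different vulnerability (P_K|H), e.g. with and without armour
# and fuel system protection.
protected = survivability(p_hit=0.3, p_kill_given_hit=0.2)
unprotected = survivability(p_hit=0.3, p_kill_given_hit=0.8)

print(f"protected:   P_S = {protected:.2f}")
print(f"unprotected: P_S = {unprotected:.2f}")
```

Holding susceptibility fixed and varying only vulnerability makes the separate contribution of each term visible, which is the distinction that conflated measures tend to obscure.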

|||||||||||||||||||||||||||||||||||||||

Figure 5. Messerschmitt Me-262A Schwalbe. The Me-262A was the first operational jet fighter, powered by a pair of Jumo 004 axial turbojets. This aircraft kinematically defeated all Allied piston engine fighters and was highly effective in combat. The immaturity of its engines resulted in frequent hot end failures, with this reliability problem resulting in frequent combat losses (U.S. Air Force image).

|

|||||||||||||||||||||||||||||||||||||||

| A good example can be found in systems such as geolocating radio-frequency receivers, which are a potent defensive aid permitting early detection and evasion of threats. The very same sensor can be employed for precision targeting of guided weapons against targets producing radio-frequency emissions. An analogous example is an Infrared Search and Track (IR&ST) system designed to acquire airborne targets, but potentially a valuable defensive sensor if it can detect missile motor ignition flares in air combat. Persistence at high speeds, subsonic or supersonic (i.e. supercruise), is another example, providing valuable capabilities in closing with targets or separating from threats, in both air-to-air and air-to-ground combat.

The overlap or duality property in both lethality and survivability across aircraft capabilities in the kinematic and information domains has been and remains a source of confusion to many observers. Another important source of confusion in assessing aircraft lethality is the implicit dependency of lethality upon survivability. If the aircraft cannot survive before it can engage its target, its lethality will be zero. In a complex warfighting situation, aircraft with poor survivability will be lost more frequently than highly survivable designs, as a result of which the lethality of less survivable aircraft will be effectively reduced.

What this means in quantitative terms is that the probability of kill against an intended target is actually a conditional probability, thus:

$$P_{\mathrm{kill\_opposed}} = P_{\mathrm{kill\_unopposed}} \mid P_S = P_{\mathrm{kill\_unopposed}} \mid (1 - P_H P_{K|H})$$

Where $P_{\mathrm{kill\_opposed}}$ is the probability that a kill can be achieved in an actual opposed threat environment, $P_{\mathrm{kill\_unopposed}}$ is the probability that a kill can be achieved in an unopposed threat environment, both assuming probabilities of kill for some combination of aircraft and weapon, and finally the term $(1 - P_H P_{K|H})$ represents susceptibility and vulnerability, as per the previous definitions by Ball and Atkinson.

This representation does not consider the correlation between weapon success rates at release and any defensive play the aircraft may need to employ in an opposed environment, where such a play may impair weapon effectiveness. For instance, bomb deliveries and missile launches may be compromised by suboptimal kinematics at release. Therefore, a more accurate representation of the problem is thus:

$$P_{\mathrm{kill\_opposed}} = P_{\mathrm{kill\_unopposed}} \mid P_{\mathrm{shot\_unimpaired}} \mid (1 - P_H P_{K|H})$$

Where the term $P_{\mathrm{shot\_unimpaired}}$ represents the probability that the weapon release(s) is (are) not impaired in an opposed environment.

What this says in plain language is that an aircraft with poor survivability cannot be highly lethal in an opposed environment, even if it is highly lethal in an unopposed environment, such as a test range. Mills has represented these relationships in tabular form [8]:

In this representation, “productivity” is a measure of attrition inflicted upon an opponent, as a result of lethality and survivability. Highly survivable aircraft with good lethality produce much greater effect in combat and are thus more “productive” in attrition warfare.

Another important observation is that selection mechanisms observed to attrit combat aircraft can vary widely between periods of peace and times of conflict. In conflicts or sustained strategic competition involving peer competitor nations or near peer competitor nations, the survival imperative results in rapid technological evolution which invariably selects for combat effectiveness against opposing capabilities. This selection mechanism has been observed repeatedly between the late 1930s and early 1990s.

Periods in which there was no overt conflict between peer competitor nations or near peer competitor nations, and where there was a widely held belief that no strategic competition was under way, have been characterised by often wholly arbitrary choices in what aircraft are manufactured or maintained in operation. There is no evidence which substantiates the commonly held belief that acquisition or life cycle cost minimisation is the determining “peacetime selection mechanism” in combat aircraft under such conditions [9]. |
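Returning to the opposed-environment kill probability expressions given earlier in this section, the dependency of lethality on survivability can be sketched numerically. The source expresses the relationship with conditional notation; the sketch below makes the simplifying assumption, not stated in the source, that the three terms are independent and therefore combine as a product. All names and input values are hypothetical.

```python
def p_kill_opposed(
    p_kill_unopposed: float,
    p_hit: float,
    p_kill_given_hit: float,
    p_shot_unimpaired: float = 1.0,
) -> float:
    """Kill probability in an opposed environment.

    ASSUMPTION (not from the source): the unopposed kill probability,
    the probability of an unimpaired weapon release, and the survival
    probability are treated as independent and multiplied together.
    """
    p_survive = 1.0 - p_hit * p_kill_given_hit  # P_S = 1 - P_H * P_K|H
    return p_kill_unopposed * p_shot_unimpaired * p_survive


# Hypothetical comparison: the same weapon (0.8 on a test range)
# carried by a highly survivable and a poorly survivable aircraft.
survivable = p_kill_opposed(0.8, p_hit=0.1, p_kill_given_hit=0.5,
                            p_shot_unimpaired=0.9)
fragile = p_kill_opposed(0.8, p_hit=0.6, p_kill_given_hit=0.8,
                         p_shot_unimpaired=0.6)

print(f"survivable aircraft: {survivable:.3f}")
print(f"fragile aircraft:    {fragile:.3f}")
```

Under these illustrative numbers the identical weapon delivers well under half the effect when carried by the less survivable aircraft, which is the plain language point made above about test range lethality not transferring to an opposed environment.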

|||||||||||||||||||||||||||||||||||||||

Patterns of Evolution in Combat Aircraft

Technological evolution in combat aircraft has displayed two distinct patterns over the last century. These have previously been labelled as “linear evolution” and “lateral evolution” [10]. Linear evolution is characteristically a process born of head to head contests between 'like' capabilities. The better performing of these like capabilities prevails, and the process is centred on established designs improving their performance in their core design optimisations. Lateral evolution is characteristically a process of rapid adaptation to a rapidly changing environment. The demand for a new capability arises unpredictably, and the winner in the contest, all else being equal, is the player who can field the new capability quickest and thus secure a decisive advantage. Platforms with strong potential for lateral evolution can be adapted quickly and cheaply, thus facilitating the process of rapid adaptation of the force structure to gain a decisive military advantage.

A good parallel is a comparison against warfighting strategies. Linear evolution parallels a brute force attrition campaign, whereas lateral evolution parallels an agile manoeuvre campaign. More often than not, lateral evolution appears to be the more successful strategy as it pits a rapidly evolved strength against an opponent's basic weakness, not unlike a manoeuvre force punching through weak enemy defences.

A careful historical study of combat aircraft yields numerous good case studies. Periods of war or intense military competition, such as arms races, are most illustrative due to the significant survival pressures driving rapid growth in military capabilities. |

|||||||||||||||||||||||||||||||||||||||

|

Linear Evolution

|

|||||||||||||||||||||||||||||||||||||||

The Second World War period presents some excellent examples, due to the large diversity of types built and operated by all participants. The RAF's Supermarine Spitfire and Luftwaffe's Messerschmitt Bf-109 were both in production at the outset and the end of the war. Both exhibited great capacity for linear evolution, and the 1945 variants of both types bore only a very basic resemblance to the 1939 models, with dramatic gains seen in performance.

Of more interest however are case studies of lateral evolution. Three aircraft stand out in this sense, these being the RAF's de Havilland Mosquito, the Luftwaffe's Junkers Ju-88 and the USAAC's Lockheed P-38. All were initially architected for single roles, the Mosquito and Ju-88 as fast bombers, the P-38 as an interceptor. By 1945 all three types had spawned a multiplicity of specialised derivatives. These types flew roles such as reconnaissance, night fighting, pathfinding strike, close air support, and anti-shipping strike. The Ju-88 even evolved into the Mistel “piggyback” cruise missile [11].

The protracted Cold War period also presents numerous interesting case studies. The F-111, devised as a supersonic low level nuclear bomber and naval interceptor, evolved into a range of conventional strike variants, a penetrating radar and communications jamming platform designated the EF-111A Raven, a reconnaissance aircraft in the RF-111C and a maritime strike aircraft. It was also employed as a platform for a sidelooking ground surveillance radar in the Pave Mover program. The F-4 Phantom II, developed initially as a naval interceptor, evolved into a fighter-bomber and spawned both a photoreconnaissance variant, the RF-4C, and a defence suppression variant, the F-4G Wild Weasel IV. The Soviet Tu-16 Badger was initially developed as a medium bomber, and spawned a wide range of derivatives including support jammers, chaff bombers, cruise missile carriers, electronic and photographic intelligence collection platforms, and radar reconnaissance platforms, with Chinese cruise missile carrier variants remaining in production at this time. Numerous other examples exist [12]. |

|||||||||||||||||||||||||||||||||||||||

|

Lateral Evolution

|

|||||||||||||||||||||||||||||||||||||||

Of interest are the factors which are common to designs which have exhibited strong lateral evolution. These clearly include highly competitive aerodynamic performance, structural design capable of adaptation and strong enough to absorb growth in weight and engine performance, load carrying ability and internal fuel capacity, and the internal volume to accommodate specialised mission systems, especially sensors. Distilling this down further, overall size relative to competing types appears to be the most common denominator in enabling lateral evolution. |

|||||||||||||||||||||||||||||||||||||||

Figure 6. The F-86 Sabre was the dominant fighter in the Korean War, the P-38 was a pivotal type during the Second World War, while the F-4 Phantom II was dominant during the Vietnam conflict. All four types were larger and in many respects kinematically superior to their opponents (U.S. Air Force image).

Figure 7. Differential evolutionary rates in basic design features.

|

|||||||||||||||||||||||||||||||||||||||

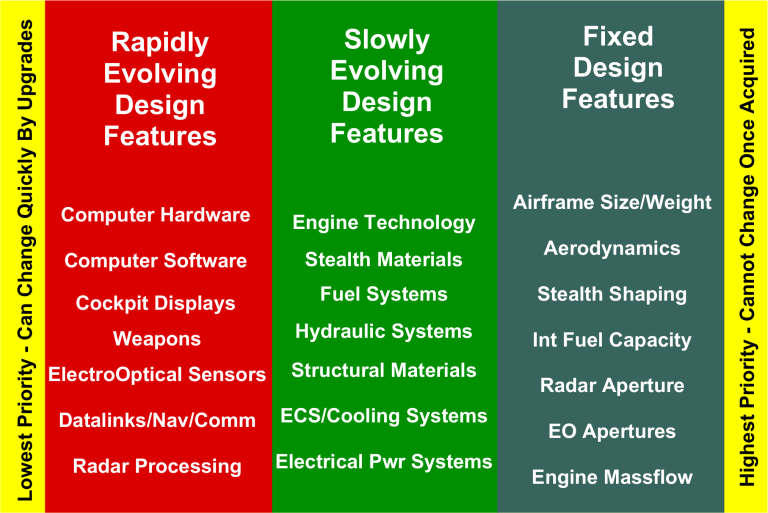

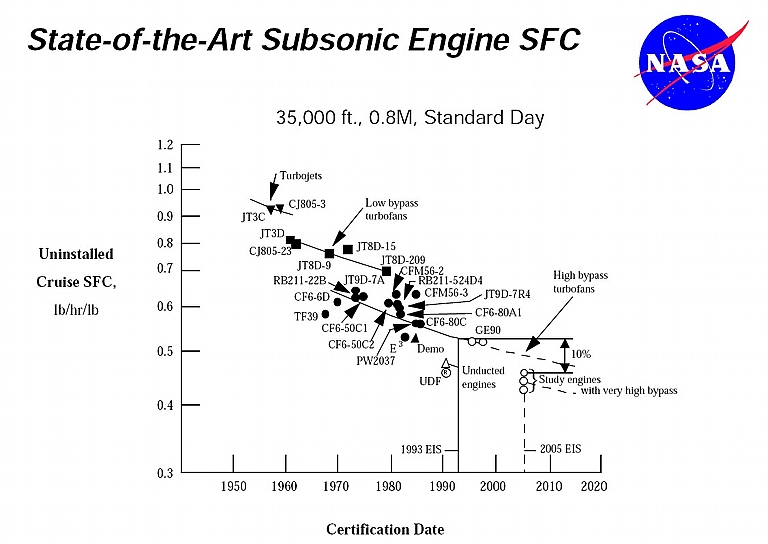

Rate of Evolution

Aircraft comprise a range of components and subsystems, many of which are constructed using fundamentally different basic technologies. These technologies mostly evolve at different rates, reflecting basic differences in the physics and mathematics which determine the properties of each.

Figure 8. Evolution of subsonic engine TSFC performance (NASA).

Airframe aerodynamics, structures, engines, observables shaping and materials, power, cooling and life support subsystems, control systems and mission avionics all exhibit different rates of evolution, and a wealth of case studies exist over the past century. The greatest dichotomy in rates of evolution continues to be observed between basic technologies providing aircraft functions in the kinematic domain, versus basic technologies providing aircraft functions in the information domain. Refer Figure 7.

A simple example can be found in comparing two fundamental measures of performance in key technologies falling into either domain. The Dry Thrust Specific Fuel Consumption (TSFC) is a key measure of efficiency in gas turbine engines. Over five decades of evolution this figure has improved by a factor of around 2:1. Over the same period the computational performance of computers, measured in Millions of Instructions Per Second (MIPS), has improved by many orders of magnitude. Refer Figure 8.

This pattern is repeated across a great many other key technologies, with the principal divide being whether these technologies provide functions in either the kinematic domain or information domain. It cannot be any other way, as the basic technologies used to provide functions in the kinematic domain are constrained by Newtonian physics, thermodynamics, fluid dynamics and chemistry. Basic technologies used to provide functions in the information domain are constrained by quantum physics, relativity and electromagnetism, and limited by the speed of light. |
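The contrast between the two domains can be made concrete by converting an overall improvement factor into an equivalent doubling time and compound annual rate. The sketch below uses the 2:1 TSFC improvement over five decades stated above; the factor of one million used for the information domain is a hypothetical stand-in for “many orders of magnitude” and is not a figure from the source.

```python
import math


def doubling_time_years(improvement_factor: float, period_years: float) -> float:
    """Years needed to double, assuming steady exponential improvement."""
    return period_years / math.log2(improvement_factor)


def annual_growth_rate(improvement_factor: float, period_years: float) -> float:
    """Equivalent compound annual improvement rate."""
    return improvement_factor ** (1.0 / period_years) - 1.0


PERIOD = 50.0  # five decades, as in the text

cases = {
    "TSFC (kinematic domain, factor 2)": 2.0,
    # ASSUMPTION: 1e6 is an illustrative placeholder only.
    "MIPS (information domain, hypothetical factor 1e6)": 1.0e6,
}

for label, factor in cases.items():
    t2 = doubling_time_years(factor, PERIOD)
    rate = annual_growth_rate(factor, PERIOD)
    print(f"{label}: doubling time {t2:.1f} years, {rate:.1%} per year")
```

Expressed this way, a 2:1 gain over fifty years corresponds to a single doubling across the whole period, whereas any gain of several orders of magnitude implies a doubling time of only a few years.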

|||||||||||||||||||||||||||||||||||||||

Exponential Growth Laws

Basic technologies employed in the information domain display, empirically, not only high rates of evolution over time, but in most instances display “geometric” or “exponential” growth properties over time [13]. Exponential growth behaviour will therefore persist in such technologies until one of two constraints is encountered:

These “laws” reflect empirical observations of growth in several key areas of basic technology, and therefore merit closer study. |

|||||||||||||||||||||||||||||||||||||||

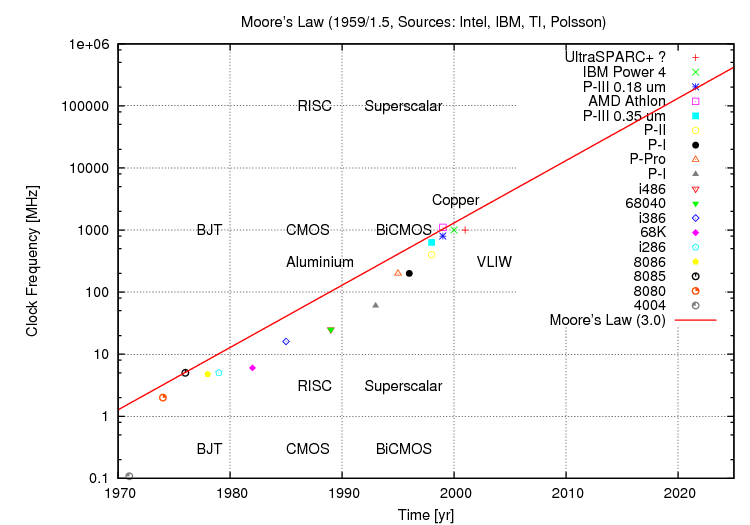

Figure 9. Moore's law for density (above) and clock frequency (below) between 1970 and 2001 (Author).

|

|||||||||||||||||||||||||||||||||||||||

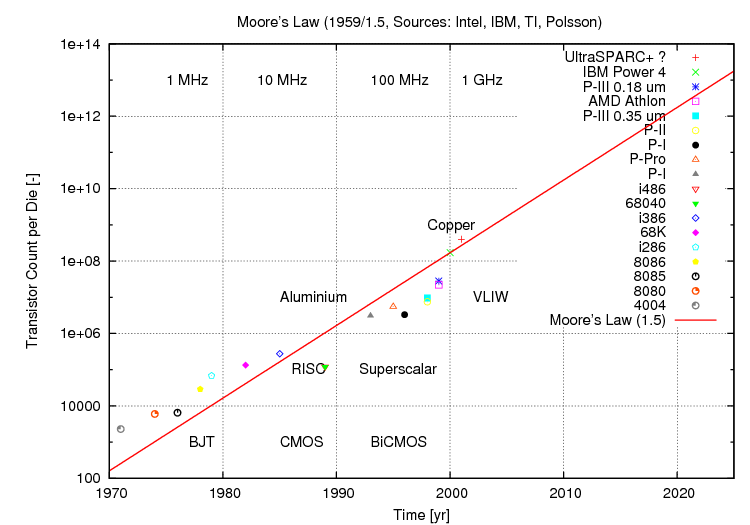

Moore's Law

Gordon Moore's empirical formula relating the density of electronic circuits to time was defined during the 1960s, and is now a widely accepted measure of technology growth. With five decades of empirical data available, the growth law is well validated [14][15]. The essential thesis of Moore's Law is that “the number of transistors which can be manufactured on a single die will double every 18 months.” The starting point for this exponential growth curve is the period during which the first Silicon planar epitaxial transistors were designed and tested, around 1959 to 1962.

Moore's Law can be generally applied to all devices using planar monolithic fabrication technologies, which encompass general purpose Central Processing Unit (CPU) chips, specialised processing chips such as Graphics Processing Units (GPU) and signal processor chips, as well as Static and Dynamic Random Access Memory (SRAM / DRAM / SDRAM), Non-Volatile Random Access Memory (NVRAM), and electrically erasable or Flash Memory chips. While Moore's Law provides a direct measure of capability in memory chips, as size is the primary measure of worth in such, it is only an indirect measure of performance in processing chips. This behaviour arises for two important reasons.

The switching speed of transistors in high density chips is critical to circuit performance. Mead observes that electronic switching circuit clock frequencies scale with the ratio of geometry sizes, as compared to transistor counts on a chip which scale with the square of the ratio of geometry sizes. So clock frequency in a processor chip can be related to density, and in turn to time [16]. The first caveat is that Moore's Law for clock frequencies must include a scaling factor, and importantly, clock frequency for a complex chip design also depends on other factors, especially the wiring design on the chip and the internal architecture of the processor [17].

The second caveat for the use of Moore's Law in estimating performance gains in CPUs, GPUs and signal processing chips is that actual computational throughput depends strongly on the internal architectural design of the processor, not simply the frequency at which it can be clocked. For “like” internal architectures, Moore's Law does indeed provide a direct measure of performance gain. A Pentium chip with a given internal die (chip) design, if fabricated with smaller transistor geometry, will produce an improvement in computational throughput which is directly proportional to the improvement in clock frequency, for computational applications which are “compute bound”, where processor performance dominates over memory bandwidth or mass storage bandwidth.

Conversely, comparisons between chips which differ significantly in both internal architectures and densities or clock frequencies can yield misleading results. Producing a variant of a 1970s microprocessor which could be clocked at 3 GigaHertz will still yield inferior computing performance to a contemporary 3 GigaHertz clock frequency chip, due to the inferior internal architecture of the older chip design.

What is frequently overlooked is that major changes in internal architecture can produce important performance gains at an unchanged clock frequency. As a result, the actual performance growth observed in processing chips over time has been typically much greater than that conferred by clock frequency gains alone. Higher transistor counts allow for more elaborate internal architectures, thereby coupling performance gains to the exponential growth in transistor counts, in a manner which is not easily scaled like clock frequencies, and more often than not difficult to exactly predict through modelling or analytical means. A worthwhile observation is that contemporary commodity microprocessor chips are, in terms of their internal architectures, much closer in design to 1970s mainframe computers and supercomputers than to microprocessors of that period. Some chip architectures, such as GPUs, are fundamentally different to their historical predecessors.

Over the last several decades there have been repeated claims that Moore's Law would not persist longer term. The material reality is that quantum physics will eventually impose hard limits on how small switching devices can be made. To date the limitations of photolithographic technology, and the electrical performance of on-chip wiring connections, have had a stronger impact on achievable density and especially clock frequencies. The most recent industry trend, where difficulties have arisen with achievable clock frequencies in processors, has been to employ parallel processing technology, usually marketed as “multicore” processors. |
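The 18 month doubling thesis and Mead's scaling observation can be combined in a short sketch. It assumes ideal scaling only: transistor count grows with the square of the geometry ratio and clock frequency with the geometry ratio itself, so clock growth is taken as the square root of density growth. The scaling factor, wiring and architectural effects noted in the caveats above are deliberately ignored, and the function names are hypothetical.

```python
import math


def transistor_growth(years: float, doubling_period_years: float = 1.5) -> float:
    """Density growth factor after `years`, doubling every 18 months."""
    return 2.0 ** (years / doubling_period_years)


def clock_growth_from_density(density_growth: float) -> float:
    """Idealised clock frequency growth implied by a density growth factor.

    ASSUMPTION: count scales with (geometry ratio)^2 and clock with the
    geometry ratio, so clock growth = sqrt(density growth). Real chips
    deviate from this because of wiring and architecture.
    """
    return math.sqrt(density_growth)


for years in (3.0, 15.0, 30.0):
    density = transistor_growth(years)
    clock = clock_growth_from_density(density)
    print(f"{years:4.0f} years: density x{density:,.0f}, ideal clock x{clock:,.0f}")
```

Over 15 years the idealised density gain is a factor of 1024 while the implied clock gain is only a factor of 32, which illustrates why transistor count is a direct measure of worth for memory chips but only an indirect measure of processor performance.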

|||||||||||||||||||||||||||||||||||||||

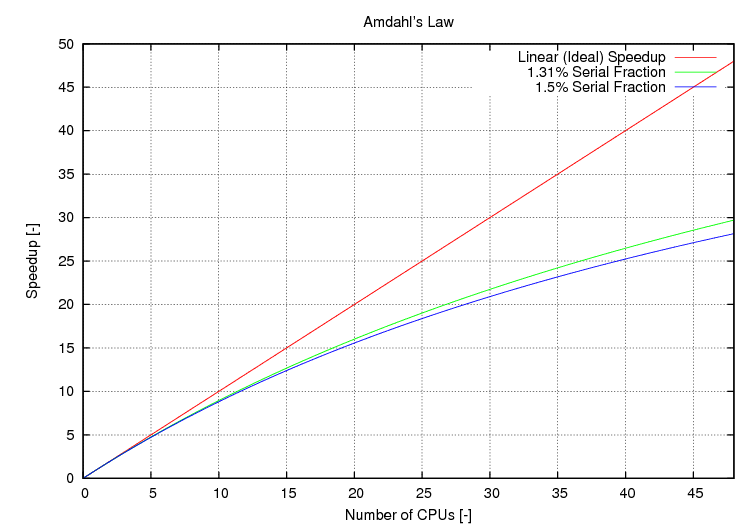

Figure 10. Amdahl's law for a multiprocessing computer system. Even a very small serial component significantly impairs achievable parallelism (Author).

|

|||||||||||||||||||||||||||||||||||||||

Amdahl's Law

Parallel processing techniques were first employed in mainframe computers and supercomputers during the 1960s, as a means of overcoming clock speed limitations arising from transistor sizes in period monolithic integrated circuit chips. Contemporary “multicore” commodity processing chips are parallel processors, in which each “core” is a general purpose CPU, and two, four or six such CPUs are fabricated on a single Silicon die, sharing common hardware such as cache memory, memory management, and bus interfaces. Computational load in such a machine is shared in some fashion between these multiple processors. The most widely used estimator of parallel processing performance gains in a system of N processors is Amdahl's Law, defined in 1967:

$$\text{Speedup} = \frac{s + p}{s + p/N} = \frac{1}{s + p/N}$$

where s and p are the serial and parallel time fractions, respectively18, so that s + p = 1. The important conclusion which falls out of Amdahl's work is that performance in parallel systems depends critically upon the problem being computed, as much as the manner in which the parallel processor is constructed. Problems in which computations stall while waiting for the results of other computations can perform very poorly on parallel processors. The bounds of performance in parallel systems are the best case of “linear speedup”, whereby no internal computational dependencies exist between parts of the problem and the performance of the parallel processing system scales directly with the number of added CPUs, and the worst case, where so many dependencies exist in the algorithm being computed that only a single CPU is ever active. Real world problems span the full continuum between these bounds. Contemporary computing practice is seeing, increasingly, the use of parallel processing environments, whether appropriate or not. At one extreme we observe commodity notebook, desktop and server systems shifting to “multicore” chips; at the other extreme we see increasing use of large scale clusters, grids and clouds for commercial and scientific / engineering computing applications. The latter are all forms of distributed parallel processing systems, where large numbers of commodity processors are connected using a high speed network of some type, more often than not the Internet. In combat aircraft, the first genuine parallel processing scheme to be architected into a design was the Pave Pillar avionic scheme, developed for the Advanced Tactical Fighter (ATF) program and reflected subsequently in the early F-22A Raptor central processing system. Parallel processing technology has since appeared in radar signal and data processing systems, and will become increasingly common in future designs, mostly using commodity or “COTS” processor technology19. 
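The sensitivity of Amdahl's Law to the serial fraction can be illustrated with a short calculation. The following is a minimal sketch, assuming the usual form of the law with p = 1 - s; the function names and the sample serial fractions and processor counts are illustrative choices, not values taken from the source.

```python
def amdahl_speedup(serial_fraction: float, n_processors: int) -> float:
    """Speedup = 1 / (s + p / N), where p = 1 - s is the parallel fraction."""
    if not 0.0 <= serial_fraction <= 1.0:
        raise ValueError("serial_fraction must lie between 0 and 1")
    if n_processors < 1:
        raise ValueError("n_processors must be at least 1")
    parallel_fraction = 1.0 - serial_fraction
    return 1.0 / (serial_fraction + parallel_fraction / n_processors)


def amdahl_limit(serial_fraction: float) -> float:
    """Upper bound on speedup as N grows without limit (1 / s)."""
    if serial_fraction == 0.0:
        return float("inf")
    return 1.0 / serial_fraction


if __name__ == "__main__":
    # Illustrative serial fractions and processor counts (assumed values).
    for s in (0.0, 0.01, 0.05, 0.25):
        cells = ", ".join(
            f"N={n}: {amdahl_speedup(s, n):.1f}" for n in (2, 4, 6, 64, 1024)
        )
        print(f"s={s:.2f} -> {cells} (limit {amdahl_limit(s):.1f})")
```

With s = 0 the speedup is linear in N, which is the best case described above; with s = 1 only one processor is ever usefully active and the speedup is unity, whatever the processor count.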
The most recent trend in parallel processing has been the use of multiple GPU chips to provide low cost high volume floating point arithmetic. Such systems may employ many hundreds or thousands of parallel execution units, providing orders of magnitude higher floating point computational performance when compared to a commodity “multicore” processor. As the internal architectures of such chips are primarily optimised for graphics computations, significant effort in software is mostly required to exploit the potential performance of the hardware. As with all parallel processing, computational dependencies impose hard constraints on achievable performance20. The contemporary and likely future trend is thus to attempt to overcome performance limitations in individual processors by aggregating large numbers of processors, often with little regard for whether this is the most efficient means of increasing performance. As a result, with some qualification, the exponential growth curve in processing performance is most likely to persist for the foreseeable future. |

|||||||||||||||||||||||||||||||||||||||

|

|||||||||||||||||||||||||||||||||||||||

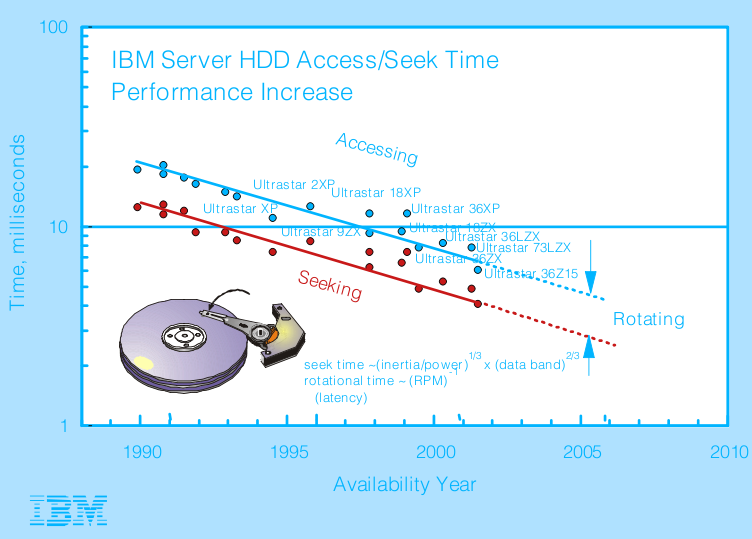

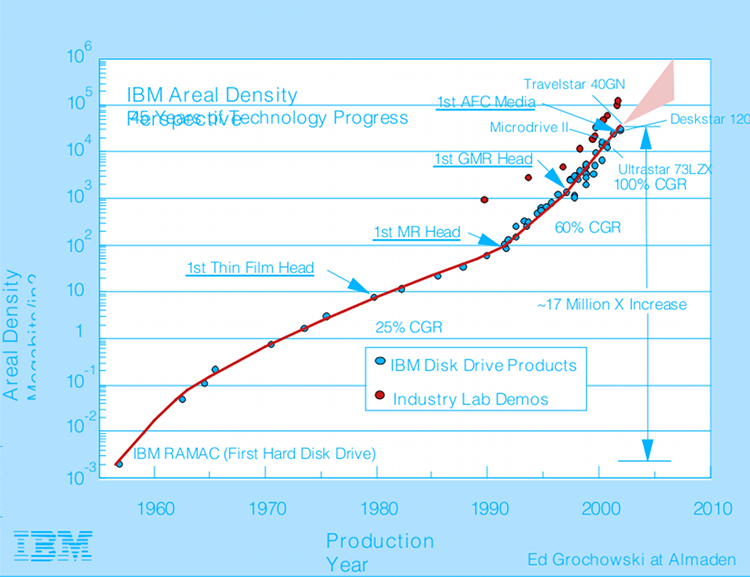

Figure 11. Evolution of rotating mass storage access times (above) and storage densities (below). The exponential growth seen in density is not paralleled by mechanically constrained access times (IBM).

|

|||||||||||||||||||||||||||||||||||||||

Kryder's Law and Mass Storage Technology

Kryder's Law was defined to estimate exponential growth in the density of rotating magnetic storage devices or “hard disks”, in a manner analogous to Moore's Law. It is sometimes labelled “Moore's Law for hard disks”, and usually defined as a “doubling of capacity per dollar” over an 18 month to 24 month period21. Like Moore's Law, Kryder's Law represents the progressive evolutionary improvement in density, produced by technological improvements in magnetic materials and disk drive heads. Mark Kryder of Seagate defined the law in 2005. While Moore's Law has exhibited modest short term perturbations, Kryder's Law has tended to larger perturbations due to the stronger short term impact of technologies such as Giant Magneto-Resistive (GMR) heads. Whereas in monolithic chip fabrication modest increments in density can be achieved by small improvements in technology, in magnetic mass storage a new materials or construction technology may be needed to achieve growth. No differently from Moore's Law, Kryder's Law does not encompass the complete gamut of disk storage performance. It has provided a good predictor in recent years of disk storage capacity growth, and by implication data transfer rates to and from disks, both of which are determined by storage density. What Kryder's Law does not predict is the improvement in disk access times, which are determined by the rotational speed of the disk and movement velocities of disk heads. These have improved little over the last decade, reflecting the reality that access performance is determined by mechanical design, rather than electronics and magnetic materials. In effect, the access mechanism of such disks is a kinematic domain technology. Refer Figure 11. 
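The mechanical limit on access time can be illustrated using the rotational component alone. The following is a minimal sketch, assuming that on average the head must wait half a revolution for the wanted sector to arrive; head seek time is ignored, and the function name and spindle speeds are illustrative assumptions rather than figures from the source.

```python
def average_rotational_latency_ms(rpm: float) -> float:
    """Mean wait for a sector: half of one revolution, in milliseconds."""
    if rpm <= 0:
        raise ValueError("rpm must be positive")
    ms_per_revolution = 60_000.0 / rpm
    return 0.5 * ms_per_revolution


if __name__ == "__main__":
    # Assumed, representative spindle speeds in revolutions per minute.
    for rpm in (5400, 7200, 10000, 15000):
        print(f"{rpm:>6} rpm: {average_rotational_latency_ms(rpm):.2f} ms")
```

Because the latency falls only in inverse proportion to spindle speed, even a large increase in rotational speed buys a modest reduction in access time, in contrast to the repeated doublings seen in density.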
The trend to parallelism observed in processing is mirrored in the use of multiple platter disks to improve capacity per drive, and also in the use of RAID (Redundant Array of Inexpensive Disks) technology, where multiple disk drives are operated in parallel to emulate a much larger disk, with a much higher aggregate data transfer rate. Access times in RAID systems reflect the mechanically limited performance of the disk drives in the array. For the foreseeable future, again with qualification, Kryder's Law is most likely to hold. It is important to observe that Kryder's Law is specific to rotating magnetic storage device technology. Solid state non-volatile mass storage devices which employ electrically erasable Flash Memory technology, sometimes marketed as “Solid State Drives”, obey Moore's Law, although recently the growth rate has been well in excess of that observed in CPUs. While considerably more expensive in cost per Gigabyte of storage, Flash Memory is an attractive alternative to rotating storage in embedded avionic applications, as it is typically faster to access, significantly more robust in high vibration environments, and better able to survive a cyclic thermal load. Alternative solid state mass storage technologies are now emerging, and where these are based on monolithic semiconductor processes, will also obey Moore's Law22. |

|||||||||||||||||||||||||||||||||||||||

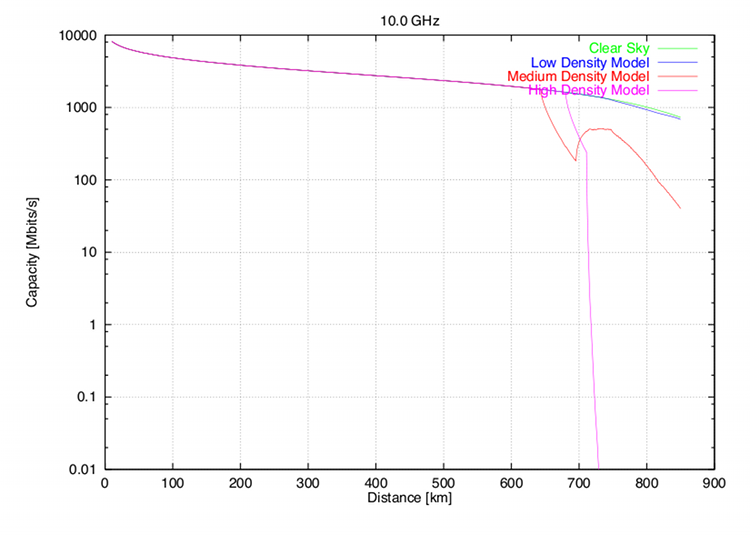

Figure 12. Performance modelling of a high data rate communications link using a representative fighter class X-band AESA, for two stations with phase centres at the tropopause. The capacity measure is based on Shannon's criterion. Useful range is strongly dependent upon weather conditions, with four models compared (Author, 1999).

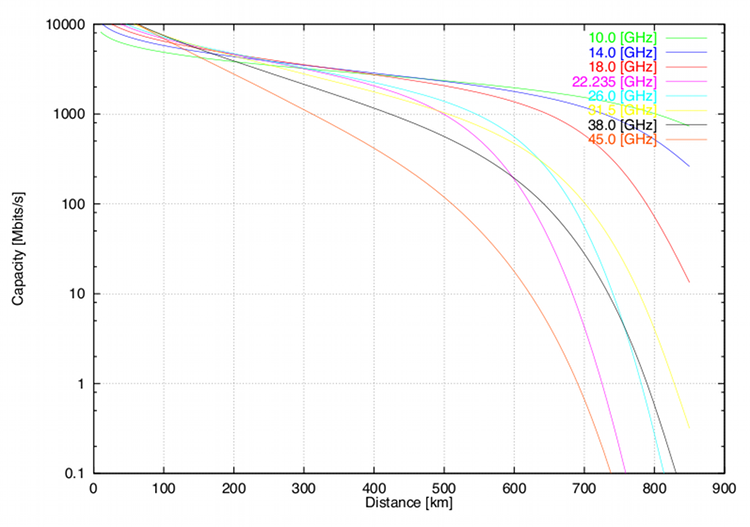

Figure 13. Performance modelling of a communications link using an AESA, for two stations with phase centres at the tropopause, and operating frequencies between the X-band and Q-band. The capacity measure is based on Shannon's criterion. Useful range is strongly dependent upon operating frequency, due to tropospheric water vapour loss peaking at 22.235 GHz, and the onset of Oxygen resonance loss at ~60 GHz. Clear sky conditions are assumed (Author, 1999).

|

|||||||||||||||||||||||||||||||||||||||

Bandwidth Laws

Nielsen's Law of Internet Bandwidth was defined in 1998 as “a high-end user's connection speed grows by 50% per year”. Edholm's Law of Bandwidth, defined for wireless, nomadic, and wireline Internet connections, asserts that “the three telecommunications categories march almost in lock step: their data rates increase on similar exponential curves, the slower rates trailing the faster ones by a predictable time lag”23. It has been empirically observed that telecommunications bandwidth follows the Moore's Law nominal 18 month doubling period24. The bandwidth laws reflect evolutionary growth across a range of different technologies, but particularly optical fibre technology and Gallium Arsenide Monolithic Microwave Integrated Circuits (MMIC)25. As with Moore's Law and Kryder's Law, the bandwidth laws are constrained by basic physics and Shannon's information theory. This is especially important when considering the impact of the bandwidth laws in assessing growth in radiofrequency wireless channels, whether these are commodity consumer wireless networking schemes like WiFi and WiMax, or more specialised military radio datalinks like JTIDS/MIDS or JTRS. Two critical constraints apply to growth in wireless network bandwidth, and neither can be dismissed or easily avoided. The first of these is the “power-aperture product” problem, whereby the achievable range and data rate of a radio link are limited by the combined effects of transmitter power, antenna gain, receiver sensitivity and the inverse square law of radio propagation loss. This sets hard limits on how many bits per second can be sent between two devices at some distance. This is further exacerbated by radio propagation effects such as absorption, refraction and especially fading26. The second constraint is available spectrum. Shannon's capacity theorem states that the achievable data rate through a channel can be manipulated by trading channel bandwidth and transmitted power, all else being equal. 
This is indeed the mathematical basis underpinning modern spread spectrum techniques. Unfortunately, congestion of the radiofrequency spectrum is increasingly a severe operational constraint27. An additional consideration is that in military radio-frequency datalinks, Low Probability of Intercept (LPI) characteristics are of increasing importance. LPI characteristics are typically achieved by the use of low power emissions, and link capacity is usually sacrificed to make the signal difficult to detect and demodulate by unwanted parties. Significant pressures will arise as a result of the exponentially growing gap between the internal bandwidth of sensor systems, which is exhibiting exponential growth properties due to the increasing use of exponentially growing computing and optical fibre technology, and constrained growth of radiofrequency datalink technology. Good examples include high resolution Synthetic Aperture Radar (SAR) systems and very high definition optical imaging systems, both of which at this time can collect data at rates in excess of 100 Megabytes/s, while the fastest operational datalinks such as CDL and TCDL are limited to 274 Megabits/s and 1,096 Megabits/s respectively28. A promising technological strategy which can increase available capacity for datalinks is the use of high power aperture Active Electronically Steered Array (AESA) antennas, discussed further. Switched beam AESA technology is already employed in the low power Ku-band Multifunction Airborne Data Link (MADL), to provide steerable pencil beam links between aircraft, the intent being to minimise geometrical opportunities for intercept. A high power-aperture AESA such as an X-band multimode radar antenna offers the same advantage of precise directional beam control, but with mainlobe widths typically between 1.5° and 5°, and significantly more power and aperture gain compared to specialised datalink antennas. 
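The interaction between Shannon capacity and the inverse square law can be sketched numerically. The example below is illustrative only: it assumes free space propagation, a flat noise spectral density, and the standard Shannon form C = B log2(1 + S/N). All function names and numerical values are hypothetical, and the absorption, refraction and fading effects noted in the text are not modelled.

```python
import math


def shannon_capacity_bps(bandwidth_hz: float, signal_power_w: float,
                         noise_density_w_per_hz: float) -> float:
    """Shannon capacity C = B * log2(1 + S / (N0 * B)) in bits per second."""
    snr = signal_power_w / (noise_density_w_per_hz * bandwidth_hz)
    return bandwidth_hz * math.log2(1.0 + snr)


def received_power_w(power_at_ref_w: float, ref_range_km: float,
                     range_km: float) -> float:
    """Inverse square law: received power falls as 1 / R^2 in free space."""
    return power_at_ref_w * (ref_range_km / range_km) ** 2


if __name__ == "__main__":
    # Hypothetical link: values chosen only to show the trend with range.
    bandwidth_hz = 100e6
    noise_density = 4e-21          # W/Hz, assumed receiver noise density
    power_at_10_km = 4e-10         # W received at the 10 km reference range
    for range_km in (10, 50, 100, 200, 400):
        signal = received_power_w(power_at_10_km, 10.0, range_km)
        capacity = shannon_capacity_bps(bandwidth_hz, signal, noise_density)
        print(f"{range_km:>4} km: {capacity / 1e6:8.1f} Megabits/s")
```

At a fixed received power, widening the channel raises capacity only while the signal to noise ratio remains useful, which is the bandwidth versus power trade described by the theorem; increasing range erodes that ratio through the inverse square law.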
Exploration of the fundamental performance bounds of such AESAs performed in 1999, using the measure of Shannon channel capacity, indicates that exceptional data transmission rates and ranges are achievable using representative AESA parameters, including data rates in excess of 2 Gigabits/s, refer Figures 12 and 13. Subsequent effort in 2006 by L-3 Communications, Lockheed Martin and Northrop Grumman using a modified AN/APG-77 radar demonstrated 1.096 Gigabits/s data rates. While the use of LPI waveforms and lower power ratings would reduce AESA link capacity, this yet to be exploited technology still presents an opportunity to advance well beyond the limitations of non-directional antennas, but importantly is also still constrained by the physics of apertures and radio-frequency propagation2930. In summary, while the Bandwidth Laws reflect the same exponential growth relationships in well behaved transmission media like optical fibre cables, they break down rapidly in radiofrequency wireless systems, where antenna power-aperture, radio propagation effects, and spectral congestion dominate achievable bandwidth. The result of this is an exponentially growing gap between achievable internal bandwidth in sensors and avionic suites, when compared to achievable bandwidth in radio-frequency datalinks employed for networking. |

|||||||||||||||||||||||||||||||||||||||

Figure 14. Focal Plane Array imaging devices display exponential growth, although doubling rates are more sedate than in other planar technology semiconductor devices, of the order of ~4.5 years (Author, 2010). |

|||||||||||||||||||||||||||||||||||||||

Moore's Law in Focal Plane Arrays

Focal Plane Array chips used for optical imaging, such as CCD (Charge-Coupled Device) or CMOS (Complementary Metal Oxide Semiconductor) chips used in still and motion camera equipment, webcams, or cellular telephones, and bandgap and QWIP (Quantum Well Infrared Photodetector) thermal imaging devices, are fabricated using the same basic processes as other commodity chips. Prima facie, it could be argued that these devices should obey Moore's Law. Empirical study (Figure 14) of pixel counts, a measure of photosite density in commercial camera imaging sensors, shows a doubling period between four and five years, much slower than that observed in CPUs, GPUs, DRAMs and other semiconductor devices fabricated using much the same processes. Unlike CPUs, GPUs, DRAMs and other commodity computing devices, imaging chips are constrained in design by factors other than photolithographically achievable photosite density, as their imaging performance depends on the number of photons each photosite can capture with acceptable signal to noise ratio performance. Another important limitation is the readout time, which increases at a given clock speed in proportion to the number of imaging photosites on the chip. While multiple readout interfaces are frequently used, this remains a major obstacle to photosite count growth. Further constraints such as compatibility with existing film camera lenses are a major factor driving the commercial market, to the extent that many imaging chips have been specifically designed to match the frame sizes of legacy wet film media, such as the 120/220 or 35 mm standards. The resulting large die sizes impact production yields, in turn impacting production costs and volumes, and as a result the doubling period is much greater than for CMOS or NMOS products which are more conventional in design. 
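The practical effect of a longer doubling period can be shown with a short calculation. This is a minimal sketch, assuming idealised exponential growth; it compares the nominal 18 month Moore's Law doubling period with the roughly 4.5 year period cited for Focal Plane Arrays, over an arbitrarily chosen nine year interval. The function name is illustrative.

```python
def growth_factor(years: float, doubling_period_years: float) -> float:
    """Multiplicative growth after `years` for a given doubling period."""
    if doubling_period_years <= 0:
        raise ValueError("doubling_period_years must be positive")
    return 2.0 ** (years / doubling_period_years)


if __name__ == "__main__":
    interval_years = 9.0  # assumed comparison interval
    processors = growth_factor(interval_years, 1.5)     # 18 month doubling
    focal_planes = growth_factor(interval_years, 4.5)   # ~4.5 year doubling
    print(f"18 month doubling over {interval_years:.0f} years: x{processors:.0f}")
    print(f"4.5 year doubling over {interval_years:.0f} years: x{focal_planes:.0f}")
    print(f"Ratio between the two: x{processors / focal_planes:.0f}")
```

Over the same interval the faster technology grows by six doublings and the slower by two, so the gap between them itself widens exponentially.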
Regardless of market constraints, the exponential growth trend is well established, and many of these chips now match or outperform legacy military imaging chips previously developed for military photoreconnaissance framing cameras. Some commercially developed imaging chips have been integrated into military equipment. Military infrared band thermal imaging chips have displayed a much slower rate of growth in resolution, reflecting fundamental limits in diffraction at the sensor plane, as well as slow learning curves in low volume manufacture, using often exotic materials and processes. Imperatives such as providing concurrent imaging in multiple infrared bands have often displaced pixel count as the driver of development investment effort3132. An interesting recent development has been the design of large scale parallel imaging sensors, which aggregate dozens or hundreds of CCD imaging devices into a single system, intended to provide high density imaging coverage of large areas. In a very basic sense this reflects the trend to parallelism observed in CPU/GPU technology, where parallelism is employed to overcome the performance limitations of individual devices. The Argus-IS system presents an interesting case study33. Hyperspectral sensors are another very useful area of imaging sensor technology which will benefit strongly from exponential growth in focal plane arrays, be these visible band or infrared. To date the cost of hyperspectral imaging sensors, both pushbroom and scanning types, has been high due to the demand for low production volume sensors, and the prodigious demand for sensor bandwidth, fast mass storage, and numerically intensive processing required. Exponential growth in the basic technologies used to construct hyperspectral sensors and provide processing will be an enabler for near term future growth. 
As a case study, the exponential growth in imaging chips is valuable, as it is the only major sensor category where Moore's Law effects collide directly with the physics of sensor apertures, and thus it represents a special case, compared to other sensor technologies. |

|||||||||||||||||||||||||||||||||||||||

System Integration Law

Defined a half decade ago by researchers at Georgia Tech in the United States, the “System Integration Law” is intended to reflect higher than Moore's Law growth arising from advances in high density electronic component packaging rather than density increases in the monolithic electronic components being packaged. The law was framed in the context of the “More than Moore's Law” movement34.

Extremely high density electronics packaging presents formidable engineering challenges, not only in providing desired electrical performance, but especially in thermal management, as heat dissipation remains a major challenge in all high density electronics. |

|||||||||||||||||||||||||||||||||||||||

Moore's Law in Monolithic Microwave Integrated Circuits

Much of the exceptional technology growth observed in military and commercial radio-frequency electronic hardware over the last two decades is a direct result of the development of repeatable manufacturing processes for Monolithic Microwave Integrated Circuits (MMICs) using GaAs centred technologies. Silicon, the mainstay of computing chip manufacturing, lacks the electron mobility necessary to construct high performance radio frequency transistors for operation in the mid and upper microwave frequency bands. Integrated circuits on a chip using GaAs were a “holy grail” technology three decades ago, and formidable challenges had to be overcome to bring the technology to maturity. Like the Internet, GaAs fabrication research was heavily funded by DARPA, yet resulted in massive commercial exploitation once viable. Within the first decade of GaAs MMIC manufacture, the military share of the market for such products was only a small percentage of the global total25.

Density growth over time in MMICs faces challenges often very different to those seen in commodity and specialised computing chips, as radio-frequency design rules apply to active and passive components, but especially to wiring interconnections within the MMIC. Electrical impedance matching and bandwidth requirements of interconnections between the MMIC and external electrical circuits can and often do consume large portions of the real estate on the chip. More often than not, only a small fraction of the MMIC area can exploit Moore's Law driven gains in transistor and passive component density. The inferior heat conduction performance of GaAs compared to Silicon has also impacted achievable densities. The limitations of basic GaAs materials have resulted in a sustained and intensive industry effort to develop alternative materials capable of operating at higher frequencies and high thermal loads, as well as a parallel effort to adapt CMOS Silicon technology for some radio-frequency applications35. Gallium Nitride (GaN) and Silicon Carbide (SiC) materials offer the potential to fabricate transistors which have up to two orders of magnitude better power handling capability than GaAs, permitting in turn corresponding gains in component performance for applications such as radar or radio-frequency networking36. While the exceptional density growth observed in Silicon computing chips may never be observed in radio-frequency MMICs, the density growth relative to legacy discrete component technologies will be exponential. |

|||||||||||||||||||||||||||||||||||||||

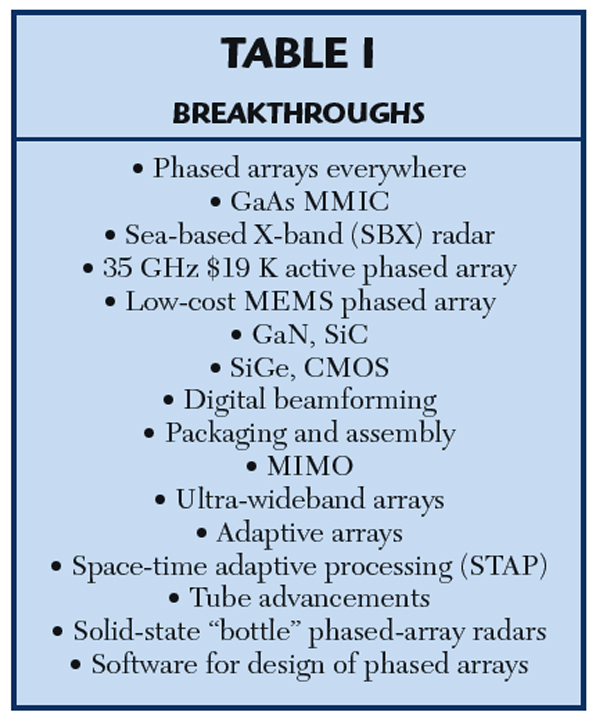

Evolutionary Growth in Apertures

Apertures, comprising antennas in radio-frequency systems, and lenses and mirrors in optical systems, are critical components of military avionic suites, whether employed for sensors, communications and networking, or more recently, for Directed Energy Weapon (DEW) applications. The challenges which arise from the need to control radio-frequency and optical band signatures to meet design objectives in stealthiness, as well as traditional imperatives of minimising weight, volume and power dissipation in avionics, have seen a slow but inevitable trend to “multifunction apertures”, where for instance a radar antenna is employed for its host radar, but also as an aperture for bidirectional high speed digital data transmission, passive interferometric detection of radio-frequency emitters, in-band jamming and disruptive radio-frequency DEW applications37. In optical systems a parallel trend to share an aperture for multiple uses is well established. Many electro-optical targeting systems share common windows or stabilised mirror systems for thermal imager, visible band imager, laser rangefinder and passive laser spot tracker subsystems. Laser DEWs frequently employ a common primary aperture for the power delivery beam and the offset wavelength beam employed to control power delivery beam wavefront shaping38. While shared multifunction apertures are desirable from a systems integration perspective, both in terms of meeting observables performance objectives and minimising weight and volume, the design of such apertures can present difficult challenges as a result of strongly divergent design objectives for the various functions or subsystems which share the aperture. Structural mode RCS, aperture bandwidth and power handling performance are exactly such constraints, which present as common problems in both radio-frequency and optical band apertures.

Table 4: AESA technology breakthroughs (Brookner, 2008). |

|||||||||||||||||||||||||||||||||||||||

Active Electronically Steered Arrays

Electronically Steered Array (ESA) or “phased-array” antenna technology entered operational use in 1940 when the Luftwaffe deployed its first GEMA Mammut VHF-band acquisition radars. Until a decade ago, ESA technology was mostly confined to surface based acquisition, early warning and Surface-to-Air Missile engagement radars, primarily due to weight and volume39. The first Passive ESA (PESA) fighter radar to be mass produced was the Soviet low X-band Tikhomirov NIIP N007 Zaslon or Flash Dance, which employed a 1.1 metre array with 1700 X-band phase elements, with an embedded 64 element L-band IFF array. This radar was so large it was only ever fitted to the 100,000 lb gross weight class MiG-31 Foxhound interceptor, built from the mid 1980s40. The attraction in all ESA radars is agility in beam steering, beam shaping, and the potential for forming nulls or multiple beams, all at update frequencies of up to kiloHertz. This permits enormous functional flexibility, both in dynamically adapting antenna characteristics during the operation of different modes, and in interleaving different modes to in effect multiplex the radar between different tasks, creating the illusion of concurrency to the user. In a sense an ESA radar provides a form of parallelism, albeit by multiplexing a single electronically steered antenna. The Active Electronically Steered Array (AESA) is now the dominant technology in new build fighter, strike and multimode radars of United States and European origin, and in Russian designs about to enter production. An AESA or “active phased array” embeds a passive radiating and matching element, a low noise receiver, a solid state transmitter of several Watts or tens of Watts power rating, a phase or time delay beam steering control element, a gain control element, and support electronics, into each and every Transmit-Receive (TR) Module forming the array. 
The backplane of the AESA provides RF signal distribution, and often passive feed networks for monopulse and MTI operating modes. Beam steering software for antenna control is often embedded in the radar data processing system. The reliability of current AESA based antennas is one to two orders of magnitude better than the reliability of the TWT transmitter and planar array antenna technology being replaced. The GaAs MMIC was the enabling basic technology for airborne X-band and Ku-band AESA radars. The principal design challenges in current AESA designs remain in achievable bandwidth, TR module radio-frequency power output, and array cooling. Liquid cooling remains the preferred technique. AESAs could be described without overstatement as a revolutionary technology in fighter radar, overcoming limitations in beamsteering and beamforming agility, antenna bandwidth, antenna sidelobe performance, receive sensitivity and operational reliability in legacy radar designs. Moreover their compact fixed design permits installation in much smaller volumes than required for mechanically steered antennas, with eventual potential for conformal installations. The flexibility inherent in AESAs makes them the ideal technology for multifunction radio-frequency band apertures. The combined effect of Moore's Law driven growth in computing power and MMIC based AESA technology has been exponential growth in the number of different modes and functions an AESA radar can perform, over the last decade. That exponential growth is not paralleled in detection range performance, which is constrained by radar power-aperture product, AESA power conversion efficiency, airframe power generation and especially cooling capacity, the inverse fourth power law, and tropospheric radiofrequency propagation effects. 
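The weak dependence of detection range on power-aperture growth follows directly from the inverse fourth power law. The following is a minimal sketch, assuming that received echo power falls with the fourth power of range and that all other terms in the radar range equation (target signature, losses, receiver noise) are held constant; the function names and the parameter `power_aperture_gain` are illustrative.

```python
def relative_detection_range(power_aperture_gain: float) -> float:
    """Range multiplier for a given multiplicative gain in power-aperture.

    Echo power scales as 1 / R^4, so range scales as the fourth root of
    the gain when the detection threshold is unchanged.
    """
    if power_aperture_gain <= 0:
        raise ValueError("power_aperture_gain must be positive")
    return power_aperture_gain ** 0.25


def required_gain_for_range(range_multiplier: float) -> float:
    """Power-aperture gain needed to stretch detection range by a factor."""
    if range_multiplier <= 0:
        raise ValueError("range_multiplier must be positive")
    return range_multiplier ** 4


if __name__ == "__main__":
    for gain in (2.0, 4.0, 10.0, 16.0):
        print(f"x{gain:>4.0f} power-aperture -> "
              f"x{relative_detection_range(gain):.2f} range")
    print(f"Doubling range needs x{required_gain_for_range(2.0):.0f}")
```

A doubling of power-aperture product therefore yields less than a twenty percent gain in range under these assumptions, which is why detection range does not track the exponential growth seen in processing.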
Bandwidth is another area where exponential growth has not occurred, and where hard limits arise due to fixed aperture sizes, and genuine challenges in the analogue design of wideband radiating elements and AESA backplane analogue feed networks. Brookner's excellent AESA technology survey, published in 2008, provides a robust roadmap of basic technology developments which will impact radio-frequency aperture designs over the coming two decades35. |

|||||||||||||||||||||||||||||||||||||||

Digital Beamforming and Adaptive Arrays

Analogue beamforming technology currently dominates AESA designs, and has the principal limitation that at any time the antenna can form only one beam, the shape and direction of which are determined by the phase or delay setting applied to the single phase or delay control element in each TR module. While the addition of multiple parallel phase or delay control elements would permit multiple beams to be formed concurrently, volumetric and density limits in TR module construction, and density limits in feed networks, would impose hard constraints on the level of concurrency which is achievable. Digital BeamForming (DBF) involves a fundamentally different architecture to established AESAs, in that individual array elements (TR modules) or subarrays of elements each include Intermediate Frequency receiver chains and Analogue to Digital converters. Rather than combining analogue radio-frequency signals in a feed network, with gain and phase/delay weightings manipulated at radio frequencies, a DBF design transfers digital outputs from each element or subarray into a signal and data processing system which performs the weighted summations in software. The level of concurrency achievable is then limited only by the computational performance of the processor system. Multiple concurrent beams may be thus formed, each with unique characteristics for the intended application. An important advantage of the DBF approach is that the bottleneck in bandwidth which arises in the antenna backplane is removed. The feed network is replaced by digital bussing, which while challenging to design well, is not as critical in analogue performance demands as a radio-frequency feed network. Currently DBF techniques are confined to the receiver path, but this does not preclude a digital transmit path up to a Digital to Analogue converter in the TR module, thus eliminating analogue feed technology altogether. 
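The weighted summation which a DBF processor performs in software can be sketched as follows. This is an illustrative example only, assuming a uniform linear array with half wavelength element spacing, a single narrowband plane wave, noise free element samples and uniform amplitude weights. The function names and parameters are hypothetical and do not represent any fielded design.

```python
import cmath
import math


def element_samples(n_elements: int, spacing_wavelengths: float,
                    arrival_deg: float) -> list[complex]:
    """One digitised sample per element for a plane wave from arrival_deg."""
    step = 2.0 * math.pi * spacing_wavelengths * math.sin(math.radians(arrival_deg))
    return [cmath.exp(1j * step * n) for n in range(n_elements)]


def steering_weights(n_elements: int, spacing_wavelengths: float,
                     steer_deg: float) -> list[complex]:
    """Phase weights which align element samples for a beam at steer_deg."""
    step = 2.0 * math.pi * spacing_wavelengths * math.sin(math.radians(steer_deg))
    return [cmath.exp(-1j * step * n) / n_elements for n in range(n_elements)]


def form_beams(samples: list[complex], spacing_wavelengths: float,
               steer_angles_deg: tuple[float, ...]) -> dict[float, float]:
    """Form several beams from the same samples; return output magnitudes."""
    n = len(samples)
    outputs = {}
    for angle in steer_angles_deg:
        weights = steering_weights(n, spacing_wavelengths, angle)
        outputs[angle] = abs(sum(w * x for w, x in zip(weights, samples)))
    return outputs


if __name__ == "__main__":
    # A 16 element array observing one emitter at 20 degrees off boresight.
    samples = element_samples(16, 0.5, 20.0)
    for angle, level in form_beams(samples, 0.5, (-30.0, 0.0, 20.0)).items():
        print(f"beam at {angle:+6.1f} deg: output {level:.3f}")
```

Each additional beam costs only another weighted sum over the same stored samples, which is why the achievable concurrency is set by processor throughput rather than by feed network hardware.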
Adaptive arrays are an outgrowth from 1960s SideLobe Canceller (SLC) technology, where a separate antenna was employed to capture surface clutter backscatter and/or jammer signals, which were then subtracted from mainlobe signals to effect clutter and jammer rejection. In an adaptive array, a mainlobe is formed with embedded nulls which are pointed at unwanted signal sources, thus rejecting these within the antenna mainlobe itself35. DBF and adaptive array technology were first employed in mass production jam resistant GPS receiver antennas, operating in the L-band, with very modest element counts, a necessity given the -160 dBm signal levels employed. This technology was adopted since it was the only technique available which permitted concurrent beamforming to place multiple mainlobes on multiple satellites, while placing multiple antenna nulls on multiple hostile jammers41. Brookner details the advantages of DBF thus29: “Using DBF eliminates the analog combining hardware, analog down-converting and all the errors associated with them. This in turn will lead to ultra-low side lobes. It will allow the implementation of multiple [receive] beams pointing in different directions. It will enable the adaptive use of different parts of the antenna for different applications at the same time.” “At the same time, the search angle accuracy is improved by about 40 percent. DBF will also permit better adaptive-array processing.”42 Moore's Law driven computing performance growth and MMIC technology are the enablers for DBF and adaptive array techniques in AESA apertures. They will also be determinants of what capability in DBF and adaptive array techniques can be incorporated into a radar design at any given time in the foreseeable future. 
The gains in radar performance detailed by Brookner are important advances in their own right, but the concurrency provided by DBF and adaptive array techniques yields important gains where the aperture is being shared between radar functions and functions such as datalinking, radio-frequency passive surveillance and geolocation, and jamming of emitters.

Space Time Adaptive Processing

Space Time Adaptive Processing (STAP) techniques are employed to adaptively reject surface clutter by placing a null on the clutter source. The technique is used on the United States E-2D AN/APY-9 radar and the Russian 1L119 Nebo SVU and Nebo M radars354344. The primary enabling technology for STAP is Moore's Law driven computing power. |


Sensor Fusion

Sensor fusion techniques are enabled primarily by Moore's law driven computing power, and the availability of high capacity datalinks and networks which permit realtime or near-realtime collection of sensor output data to permit fusion to be performed. Sensor fusion can be performed using like sensors, such as multiple radars of like or different configuration, or by using dissimilar sensors, such as passive radio-frequency sensors, radar and optical sensors. This may be performed on a single platform equipped with multiple sensors, or by fusing sensor outputs from multiple platforms.

The benefit arising from well performing sensor fusion is that it can overcome the specific limitations of particular sensors, thus improving not only confidence in target identification but also track quality. This is especially important in an environment where effort is being made to control or significantly reduce platform signatures in multiple bands. Examples of sensor fusion systems include the US Navy Cooperative Engagement Capability (CEC) systems, or Russian designs such as the Poima or NNIIRT Nebo M system [45].

With the continuing proliferation of digital networking technology and exponential growth in computing power, the trend will inevitably be that of increasing capability and use of sensor fusion techniques.
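The improvement in track quality from fusing sensors can be illustrated with the simplest textbook case, inverse-variance weighting of independent estimates of the same quantity. This is a minimal sketch, assuming unbiased sensors with independent Gaussian errors. It is not a description of how CEC, Poima or Nebo M perform fusion, and the sensor values shown are hypothetical.

```python
def fuse(estimates):
    """Fuse independent (value, variance) estimates by inverse-variance weighting.

    Returns the fused value and its variance. The fused variance is never
    larger than the smallest input variance.
    """
    total_weight = sum(1.0 / variance for _, variance in estimates)
    fused_value = sum(value / variance for value, variance in estimates) / total_weight
    return fused_value, 1.0 / total_weight


if __name__ == "__main__":
    # Hypothetical range estimates in metres: (value, variance).
    radar = (10000.0, 100.0)
    passive_sensor = (10050.0, 400.0)
    value, variance = fuse([radar, passive_sensor])
    print("fused range:", value, "variance:", variance)
```

The fused estimate is weighted toward the more accurate sensor, and its variance is lower than that of either input, which is the basic mechanism by which fusion improves track quality.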


Augmented Cognition Systems

The best known example of an Augmented Cognition System (ACS) is the 1980s demonstration by the AFRL of the “Pilot's Associate” system, initially intended for the Advanced Tactical Fighter (ATF) program. Cite [46]:

“The Pilot's Associate program, a joint effort of the Defense Advanced Research Projects Agency (DARPA) and the US Air Force to build a cooperative, knowledge-based system to help pilots make decisions is described, and the lessons learned are examined. The Pilot's Associate concept developed as a set of cooperating, knowledge-based subsystems: two assessor and two planning subsystems, and a pilot interface. The two assessors, situation assessment and system status, determine the state of the outside world and the aircraft systems, respectively. The two planners, tactics planner and mission planner, react to the dynamic environment by responding to immediate threats and their effects on the prebriefed mission plan. The pilot-vehicle interface subsystem provides the critical connection between the pilot and the rest of the system. The focus is on the air-to-air subsystems.”

An ACS could thus be described as an adjunct to a sensor suite, the intent of which is to accelerate interpretation of the outputs of onboard and offboard sensors, and thus accelerate the Observation-Orientation phases in Boyd's OODA Loop [47].

The principal challenge in implementing ACS techniques is that they can be very computationally intensive, which presented a major obstacle two decades ago in embedded applications such as combat aircraft. With exponential growth in computing performance this will no longer be true, and as a result, Moore's law driven computing growth is an enabler for ACS technologies.


Optical Sensors

The impact of Moore's law in optical imaging array technology detailed previously is that optical sensors will see increasing use in combat aircraft, as the cost of Focal Plane Array devices progressively declines, and imaging site counts increase. A good trend indicator is the adoption of spherical Missile Approach Warning System (MAWS) coverage using the AAR-56 MLD in the F-22A Raptor, and the much more ambitious AAQ-37 EO DAS developed for the problematic F-35 program.

The full potential of Focal Plane Array technology remains to be realised, due to limitations in array imaging site counts, but also due to the cost demands of cooling for signal to noise ratio control, the cost of sensor angular stabilisation, limited readout rates and the immaturity of algorithms for fusing outputs from multiple imaging sensors. A problem which persists could be described as the “f-number tradeoff”, in that good long range detection performance requires large optics to gather light and a narrow instantaneous Field Of View (FOV), which is at odds with the concurrent need for wide angle coverage with multiple sensors. This is in a sense not unlike the conflicting radar antenna mainlobe needs of search regimes versus tracking regimes.

Exponential growth in Focal Plane Array sensors and supporting computing capabilities will enable significantly better capabilities over the coming decade, while the driving imperative for this growth will be that of overcoming the effects of radio-frequency signature reduction in opposing platforms.
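The “f-number tradeoff” can be made concrete with basic imaging geometry. The sketch below assumes a simple thin-lens model and a square array. The pixel count, pixel pitch, focal lengths and f-number are hypothetical values chosen for illustration, not parameters of any fielded sensor.

```python
import math


def ifov_mrad(pixel_pitch_um, focal_length_mm):
    """Per-pixel instantaneous field of view in milliradians (small-angle approximation)."""
    return (pixel_pitch_um * 1e-6) / (focal_length_mm * 1e-3) * 1e3


def fov_deg(pixels_across, pixel_pitch_um, focal_length_mm):
    """Total field of view across the array, in degrees."""
    half_width_m = 0.5 * pixels_across * pixel_pitch_um * 1e-6
    return 2.0 * math.degrees(math.atan(half_width_m / (focal_length_mm * 1e-3)))


def aperture_diameter_mm(focal_length_mm, f_number):
    """Entrance aperture diameter implied by the definition of f-number."""
    return focal_length_mm / f_number


if __name__ == "__main__":
    # Hypothetical 1024-pixel-wide array with 20 micron pitch, f/2 optics.
    for focal_mm in (20.0, 200.0):
        print(
            "focal length", focal_mm, "mm:",
            "IFOV", round(ifov_mrad(20.0, focal_mm), 3), "mrad,",
            "FOV", round(fov_deg(1024, 20.0, focal_mm), 1), "deg,",
            "aperture", aperture_diameter_mm(focal_mm, 2.0), "mm",
        )
```

For a fixed array, a tenfold longer focal length gives a tenfold finer per-pixel angular resolution but a correspondingly narrower total field of view, and at a fixed f-number it requires optics ten times larger in diameter. Wide angle coverage with fine resolution therefore demands either more imaging sites or more sensors.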


Exponential Growth Laws vs Apertures

Figure 15.

As the preceding discussion shows, exponential growth across a range of basic technologies has yielded very important gains in the performance and capabilities of information domain technologies reliant upon radio-frequency and optical apertures. What is also apparent is that many fundamental performance bounds are set by the physics of the aperture proper, and the properties of electromagnetic wave propagation in the atmosphere, especially the troposphere. Refer to Figure 15. This is an important consideration, in that exponential growth is not a feature of the cardinal performance parameters in apertures, whether these are employed for sensors, communications, or both. The expectation that this might be the case, sometimes observed in media and lay discussion of technological evolution in the information domain, is contrary to nature and thus quite unrealistic.


Evolutionary Growth in Stealth Techniques

Figure 16. Prototype of new Chengdu developed Very Low Observable fighter aircraft photographed in December, 2010. This design displays advanced shaping, following design rules employed in the F-22A Raptor (Chinese Internet).

Stealth designers have two principal technologies available for reducing the radar signature of an aircraft. These are shaping of airframe features and materials technology applied in coatings or absorbent structures [48]. Typically, the first 100- to 1,000-fold reduction in signature is produced by shaping, with further 10- to 30-fold reductions produced by materials. The smart application of these techniques reduces the signature of a B-52-sized B-2A Spirit down to that of a small bird, from key aspects.

Radar signatures are typically quantified as Radar Cross Section, which is specific for a given aspect and operating wavelength. The effectiveness of both shaping and materials technologies varies strongly with the wavelength or frequency of the threat radar in question. Shaping features must be physically larger than the wavelength of the radar to be truly effective. A shaping feature with a negligible signature in the centimeter X-band or Ku-band may have a signature that is tenfold or greater in the much lower frequency decimeter and meter radar bands [49].

Materials are also characteristically less effective as radar wavelength is increased, due not only to the physics of energy loss, but also to the “skin effect” whereby the electromagnetic waves impinging on the surface of an aircraft penetrate into or through the coating materials. Known techniques include the application of absorbent surface coatings, or the embedding of absorbent material layers in composite material aircraft skin panels. A material that is highly effective in the centimeter X-band or Ku-band may be less effective by a factor of ten or more in the lower frequency decimeter and meter radar bands [50].
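The signature reductions and wavelength dependence quoted above can be put into more familiar units with a short calculation. This is a minimal sketch. The fourth-root relationship between Radar Cross Section and detection range follows from the standard monostatic radar range equation with all other parameters held constant, and the band frequencies are representative values assumed for illustration.

```python
import math

SPEED_OF_LIGHT_M_S = 299_792_458.0


def wavelength_m(frequency_hz):
    """Free-space wavelength for a given radar frequency."""
    return SPEED_OF_LIGHT_M_S / frequency_hz


def reduction_db(fold_reduction):
    """Express an N-fold signature reduction in decibels."""
    return 10.0 * math.log10(fold_reduction)


def detection_range_factor(fold_reduction):
    """Relative detection range after an N-fold RCS reduction (range scales as RCS^(1/4))."""
    return fold_reduction ** -0.25


if __name__ == "__main__":
    # Representative (assumed) frequencies for three radar bands.
    for name, freq_hz in (("X-band", 10e9), ("L-band", 1.3e9), ("VHF", 150e6)):
        print(name, "wavelength:", round(wavelength_m(freq_hz), 3), "m")
    for fold in (100.0, 1000.0):
        print(
            fold, "fold reduction:",
            round(reduction_db(fold), 1), "dB,",
            "detection range x", round(detection_range_factor(fold), 3),
        )
```

A shaping feature a few centimetres across is comparable to the wavelength in the X-band but far smaller than a metre band wavelength, which is why its effectiveness differs so much between bands. The fourth-root law also shows why very large signature reductions are needed to produce a large reduction in detection range.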
Neither airframe shaping techniques nor materials exhibit exponential growth properties over time. This is because the RCS properties of shapes are determined by basic electromagnetism, and the RCS reduction produced by materials is determined by quantum mechanical and electromagnetic properties of materials. However, the development of both shaping techniques and materials can benefit from abundant computational power. This is especially true of computational RCS modelling techniques which lend themselves to parametric computation in large parallel computing systems. Computational modelling of airframe and component RCS for given shapes and materials can be accelerated very significantly through the application of computational clusters or grids, thereby accelerating the design cycle. Minimising the structural mode RCS of sensor apertures has been and remains a major design challenge; faster computational modelling techniques can therefore produce a beneficial impact in development cycle timelines.

Figure 17. The PAK-FA first prototype displays refined stealth shaping technology (Sukhoi image).


Software

Software has been and will continue to be a major challenge in the development of combat aircraft, and their progressive evolution through their operational life cycle. While many of these difficulties reflect the direct impact of Moore's law and very short computer hardware lifecycles, which result in early hardware component obsolescence and replacement with the concomitant need for software modification, many others reflect, more often than not, poor design in software architecture, poor choices in technology, and inadequate understanding of the system for which the software is being architected [51].

The most fundamental technological challenge is that contemporary and future combat aircraft systems are, from a computational perspective, “hard realtime” systems in which highly parallel computing hardware must be employed [52]. While the theory of hard realtime scheduling on single processor systems is mature and well understood, the theoretical area of hard realtime scheduling on multiprocessing systems is immature and remains an area of active academic research [53]. The principal difficulty which arises in large and highly parallel “hard realtime” systems is that not all computational tasks will necessarily complete in a fixed time duration, which immensely complicates the scheduling problem in a “hard realtime” environment.

Algorithms can be broadly divided into those which are “deterministic” in the sense that for some given input data, they always take the same time to compute on a given processor type, and those that are “non-deterministic” in that computational times vary given the same input data. A deterministic algorithm which is provided with the same type of input over and over again will repeatedly compute in the same length of time. This is characteristic of many algorithms used in sensor signal processing, navigation systems, flight control systems and fire control systems.
Scheduling such algorithms on a large multiprocessing system may often be difficult, but the unchanging execution time makes such tasks feasible in a “hard realtime” environment.

A much more serious problem may arise with many algorithms which are employed in data processing, sensor fusion, image extraction/analysis and artificial intelligence, where execution time for the task varies widely, presenting often intractable challenges in meeting “hard realtime” timing deadlines, and thus scheduling these. Some are simply “non-deterministic”, introducing inherent unpredictability in execution times. Other “deterministic” algorithms will vary unpredictably in execution times with unpredictably varying data inputs.

A good case study of the latter problem is the tracking of large numbers of targets, each target being individually tracked by a Kalman filter. While Kalman filters are mature, efficient, well behaved, and computed using a series of matrix operations, each additional target requires that an additional Kalman filter be executed. As a result, a task which is intended to update the positions of multiple targets will require an execution time roughly proportional to the number of targets. Numerous other instances exist presenting much the same challenges in meeting a “hard realtime” timing requirement.
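The Kalman filter case study can be sketched in a few lines. The example below is a minimal illustration, assuming a one-dimensional constant-velocity model with position-only measurements and hypothetical noise parameters. It is not representative of any fielded tracker. Rather than measuring wall-clock time, which varies between machines, it counts filter executions per update cycle.

```python
def kalman_step(track, measurement, dt=1.0, q=0.01, r=1.0):
    """One predict/update cycle of a 1-D constant-velocity Kalman filter.

    track is (position, velocity, p11, p12, p22), where p11, p12, p22 are the
    entries of the symmetric 2x2 state covariance. q and r are assumed process
    and measurement noise variances.
    """
    pos, vel, p11, p12, p22 = track

    # Predict: advance the state and covariance by dt.
    pos_pred = pos + vel * dt
    p11_pred = p11 + 2.0 * dt * p12 + dt * dt * p22 + q
    p12_pred = p12 + dt * p22
    p22_pred = p22 + q

    # Update: blend the prediction with the position measurement.
    innovation = measurement - pos_pred
    s = p11_pred + r
    k_pos = p11_pred / s
    k_vel = p12_pred / s
    return (
        pos_pred + k_pos * innovation,
        vel + k_vel * innovation,
        (1.0 - k_pos) * p11_pred,
        (1.0 - k_pos) * p12_pred,
        p22_pred - k_vel * p12_pred,
    )


def update_all(tracks, measurements):
    """Update every track once; return the new tracks and the number of filter runs."""
    updated = [kalman_step(t, z) for t, z in zip(tracks, measurements)]
    return updated, len(updated)


if __name__ == "__main__":
    initial = (0.0, 0.0, 10.0, 0.0, 10.0)
    for n_targets in (3, 30, 300):
        _, runs = update_all([initial] * n_targets, [5.0] * n_targets)
        print(n_targets, "targets ->", runs, "filter executions per cycle")
```

Each filter step has a fixed cost, but the number of steps per cycle is set by the number of targets present, which the designer does not control. The worst-case execution time of the update task is therefore bounded only if the track count is capped.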


Large multiprocessing computational systems, in which hundreds or even thousands of like or dissimilar processors are employed, will present additional difficulties in achieving predictable timing behaviour in the operating system software and machine hardware, due to queueing effects in buffers and hardware bussing interconnects in such systems, or queueing effects in interprocess communications software, avionic multiplex busses, and external networks. As caching techniques are frequently employed internally in processor hardware to improve performance, these can also introduce non-deterministic timing behaviour into the computational system. Other “hidden” causes of unpredictable timing behaviour may include runtime environments, which often include garbage collection algorithms for in-process memory management, or other runtime environment management mechanisms with highly variable timing behaviour.

The complexity of such systems reflects the realities of attempting to execute hundreds or even thousands of concurrent computational tasks, of which a large proportion will be subject to hard realtime performance constraints, and rigid mutual synchronisation requirements. Partitioning computational activities across multiple processors, where a single processor cannot meet a hard realtime performance constraint, remains a challenging problem, and for some algorithms is simply infeasible.

To these challenges must be added the human factor in software development. Numerous case studies show that programmer errors continue to be common, and understanding of the behaviour of subsystems for which software is being developed can often be inadequate. A common problem observed is that personnel who understand hard realtime design well are scarce, as this topical area is no longer taught in most universities providing computing and electrical engineering education, and is inherently difficult intellectually.
It is no exaggeration to observe that software technology and technique suitable for large heterogeneous multiprocessing computer systems with hard realtime performance constraints are lagging well behind the exponentially growing performance of hardware.
